Legislation is gradually catching up with the rapid pace of technological advancement, which has made many previously unthinkable things possible.

Following the adoption of the General Data Protection Regulation (GDPR) in Europe, privacy laws are becoming more stringent globally.

Although the US has no equally broad federal counterpart, several states are seeking to enact their own laws, such as the California Consumer Privacy Act (CCPA), rather than waiting for change at the federal level.

China is also working to implement the Personal Information Protection Law (PIPL), which will restrict what businesses can do with personal data they don't have permission to process.

People in the West often mistakenly believe that data privacy is not regarded as a protected asset in China.

From a governance perspective, the main issues revolve around two things: making sure we have authorization to use the data we want to collect, and complying with any broad regulations, such as GDPR, CCPA, or PIPL, that apply in the territories where we operate.

Courts and legislators are increasingly aware of how onerous it is to read and understand the reams of documentation attached to every service we use, so businesses can no longer hide behind their terms and conditions (T&Cs).

Simply put, they know that no one reads them. Waivers and disclaimers buried deep in the small print are therefore less and less likely to give you a strong enough defense if someone believes you are using their data for a purpose you are not authorized to use it for.

To comply with various regulations, including GDPR, it is no longer enough that data subjects have not yet exercised their right to opt out of having their personal information processed for certain purposes.
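A minimal sketch of what this means in practice, assuming consent is the lawful basis you rely on and assuming a hypothetical in-memory consent register (`ConsentRegister` and its methods are illustrative names, not a real library API). Processing is allowed only when an explicit opt-in for that specific purpose has been recorded; the absence of an opt-out counts as no permission at all.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegister:
    """Hypothetical register of consent decisions.

    Maps (subject_id, purpose) -> True (opted in) or False (opted out).
    A missing entry means the subject was never asked or never answered.
    """
    decisions: dict[tuple[str, str], bool] = field(default_factory=dict)

    def record_opt_in(self, subject_id: str, purpose: str) -> None:
        self.decisions[(subject_id, purpose)] = True

    def record_opt_out(self, subject_id: str, purpose: str) -> None:
        self.decisions[(subject_id, purpose)] = False

    def may_process(self, subject_id: str, purpose: str) -> bool:
        # Only an explicit, recorded opt-in for this exact purpose counts.
        # Silence (no entry) is treated the same as a refusal.
        return self.decisions.get((subject_id, purpose), False)


register = ConsentRegister()
register.record_opt_in("alice", "order_fulfilment")

print(register.may_process("alice", "order_fulfilment"))  # True
print(register.may_process("alice", "marketing"))         # False: never opted in
print(register.may_process("bob", "order_fulfilment"))    # False: no record at all
```

Keying consent by purpose means that agreeing to one use of the data does not silently authorize another.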

Hello Barbie, a range of talking Barbie dolls made by Mattel and powered by natural language processing, had to be discontinued over concerns that children's data was being processed and stored without their parents' permission.

The question of IP ownership and rights must also be taken into account.

Whether you use algorithms under license or created them yourself and own them outright, you must be certain that you have the right to use them.

You should also be mindful of the legal standing of any output produced by your AI and machine learning systems.

As we have seen, algorithms already exist that can produce creative works of art, poetry, and journalism.

These algorithms were developed by training them on millions of existing works of literature, poetry, and journalism.

- Can we think of the AI as merely drawing inspiration from its training data, much as Van Gogh did when drawing inspiration from his contemporaries and other great artists?

- Or is it more likely that the AI is dissecting already-created pieces of art and utilizing them to make new ones?

As far as I know, this has not yet been tested in a court of law, but it is certainly something to keep in mind as a potential obstacle down the road.

Safeguarding your Data.

At the center of data protection are the requirements to keep your data secure from accidental loss and from malicious breaches.

Both can have legal repercussions under regulations such as GDPR, and both are also crucial considerations in any data governance strategy that deals with personal data.

Companies that handle personal data face the most governance requirements.

This is likely to include any business that is serious about its data strategy, since personal data is often the most valuable data.

But there are ways to reduce your responsibilities, and one of the best is data minimization: collecting and keeping only the data you actually need.

In the past, it would have been considered strategically sound to collect every piece of data we could, in case we later found a use for it (Amazon's Jeff Bezos is credited with saying, "We never throw anything away").

Those days are long gone, because of both the enormous volume of data available today and the growing body of law and regulation.

Under the GDPR's data minimization principle, the personal data you collect must be "adequate, relevant and limited to what is necessary" for the purposes you have been granted permission to process it for.
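A minimal sketch of applying minimization at the point of collection, assuming a hypothetical mapping from each permitted purpose to the fields it genuinely requires. Anything not listed for the purpose is dropped before the record is stored; the purposes, field names, and the `minimize` helper are illustrative only.

```python
# Hypothetical mapping from processing purpose to the fields it actually needs.
REQUIRED_FIELDS = {
    "shipping": {"name", "street", "city", "postcode"},
    "age_verification": {"date_of_birth"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields needed for `purpose`; drop everything else."""
    try:
        allowed = REQUIRED_FIELDS[purpose]
    except KeyError:
        # No agreed purpose means no permission to keep anything.
        raise ValueError(f"no permitted purpose named {purpose!r}") from None
    return {key: value for key, value in record.items() if key in allowed}


submitted = {
    "name": "A. Customer",
    "street": "1 High Street",
    "city": "Leeds",
    "postcode": "LS1 1AA",
    "date_of_birth": "1990-04-01",
    "phone": "07700 900000",
    "browsing_history": ["/home", "/basket"],
}

print(minimize(submitted, "shipping"))
# {'name': 'A. Customer', 'street': '1 High Street', 'city': 'Leeds', 'postcode': 'LS1 1AA'}
```

Rejecting unknown purposes outright, rather than returning the full record, keeps the default on the side of collecting less.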

Where personal data must be collected, steps can be taken to break the link between the data and the person it describes, producing data that is still useful for analysis but (ideally) no longer meets the definition of "personal data": any data that can be connected to a living person.

I say "ideally" because it has repeatedly been shown that many of the methods used to anonymize or de-identify data are not entirely reliable.

Even if you remove all names from your records, someone with the necessary skills and resources may be able to link your data to particular people.

For instance, if you hold information on every resident of a street but no names, you may still be able to pinpoint a specific person from their age range, employment status, or marital status, especially if it is a very small street.

Once non-personal data can be linked to a living person in this way, it becomes personal data, and you are accountable for it.
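One common way to measure this risk is k-anonymity, a technique chosen here for illustration: group the records by the quasi-identifiers an attacker might know (here age range, employment status, and marital status) and look at the size of the smallest group. A group of one means that person can be singled out even though no name is stored. The records below are made up for a hypothetical small street.

```python
from collections import Counter

# Made-up, name-free records for residents of a small street.
residents = [
    {"age_range": "30-39", "employment": "employed", "marital": "married"},
    {"age_range": "30-39", "employment": "employed", "marital": "married"},
    {"age_range": "40-49", "employment": "retired", "marital": "single"},
    {"age_range": "20-29", "employment": "student", "marital": "single"},
    {"age_range": "20-29", "employment": "student", "marital": "single"},
]

QUASI_IDENTIFIERS = ("age_range", "employment", "marital")


def group_sizes(rows, keys):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def k_anonymity(rows, keys):
    """The dataset is k-anonymous for k equal to its smallest group size."""
    return min(group_sizes(rows, keys).values())


def unique_combinations(rows, keys):
    """Combinations shared by exactly one row: those people can be singled out."""
    return [combo for combo, n in group_sizes(rows, keys).items() if n == 1]


print(k_anonymity(residents, QUASI_IDENTIFIERS))          # 1
print(unique_combinations(residents, QUASI_IDENTIFIERS))  # [('40-49', 'retired', 'single')]
```

A k of 1 flags at least one resident as unique on these attributes. Generalizing values (wider age bands, dropping a column) raises k at the cost of analytical detail, and k-anonymity alone does not guarantee the data cannot be linked to people in other ways.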

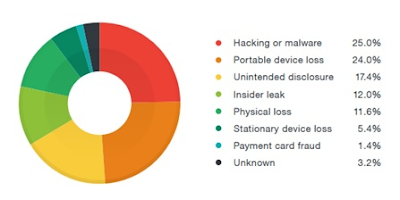

Breach of Data.

Data breaches are an increasingly common threat to businesses.

US businesses that experienced a data breach in 2019 paid an average of $8.19 million per breach.

On top of the significant financial cost, a breach can cause reputational damage severe enough to bring a company down quickly.

There are some indications that firms are getting better at preventing them, as the number of breaches fell somewhat between 2018 and 2020.

Even so, over the longer term breaches have been growing larger and more frequent. They remain a significant risk, and any governance approach should include measures to reduce it.

Authorization is a key concept to include in your approach.

This means a clearly defined permission system that establishes who has access to each specific dataset.

Only those who need the data to complete the task it is needed for should have access to it, an idea usually called the principle of least privilege.
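The permissioning idea can be sketched as a deny-by-default lookup. The roles, dataset names, and the `can_access` helper below are hypothetical; real deployments would normally rely on the access-control features of their database, cloud platform, or identity provider rather than a hand-rolled table.

```python
# Hypothetical grants: each role lists only the datasets its task requires.
GRANTS: dict[str, frozenset[str]] = {
    "payroll_clerk": frozenset({"salaries"}),
    "marketing_analyst": frozenset({"campaign_results", "web_analytics"}),
    "support_agent": frozenset({"customer_contacts"}),
}


def can_access(role: str, dataset: str) -> bool:
    """Deny by default: allow only if the role was explicitly granted the dataset."""
    return dataset in GRANTS.get(role, frozenset())


print(can_access("payroll_clerk", "salaries"))          # True
print(can_access("marketing_analyst", "salaries"))      # False
print(can_access("unknown_role", "customer_contacts"))  # False
```

Keeping grants explicit also makes them easy to review during an audit, since anyone can read off who can see what.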

Encryption is another. Encrypted data is far less valuable to a thief who does not also hold the key needed to decrypt it.

As with any security measure, encryption adds some friction for legitimate users, but many systems now handle encryption and decryption on the fly, in a way that is invisible to the data consumer.

The HTTPS protocol used to deliver web pages to your browser and the automatic end-to-end encryption used by messaging services such as WhatsApp are good examples of this.
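For data in transit, most languages expose TLS, the protocol underneath HTTPS, through their standard library. As a sketch in Python, `ssl.create_default_context()` builds a client context that verifies the server's certificate and hostname. The minimum-version line is an illustrative hardening choice, not a requirement from the text, and `negotiated_version` is a hypothetical helper; `example.com` is a placeholder host.

```python
import socket
import ssl
from typing import Optional


def make_client_context() -> ssl.SSLContext:
    """Build a TLS client context; certificate and hostname checks are on by default."""
    context = ssl.create_default_context()
    # Illustrative hardening choice: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def negotiated_version(host: str, port: int = 443) -> Optional[str]:
    """Connect over TLS and report which protocol version was negotiated."""
    context = make_client_context()
    with socket.create_connection((host, port), timeout=10) as raw_sock:
        # wrap_socket performs the handshake and verifies the server certificate.
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.version()


ctx = make_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
# negotiated_version("example.com") needs network access, so it is not called here.
```

Once the context is set up, the application reads and writes ordinary bytes while the library encrypts and decrypts them on the fly, which is the "invisible to the data consumer" property described above.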

Data can be encrypted only while it is at rest in storage, only while it is in transit, both at rest and in transit, or at all times, being decrypted only at the moment it is used.

Homomorphic encryption is another option to consider.

Here, the data is encrypted in such a way that it can be analyzed while remaining in encrypted form, so even the analytical algorithms never "see" the unencrypted data.

Users with the necessary rights can even modify data held in the cloud without exposing the unencrypted data to the cloud servers.

Homomorphic encryption is somewhat constrained by the computing power you have at your disposal.

Partially homomorphic encryption and somewhat homomorphic encryption are the two types of homomorphic encryption now in common use, and both restrict which operations can be performed on the encrypted data.

Fully homomorphic encryption is not constrained in this way, but it demands a great deal of processing power and is inevitably slower.
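To make the idea concrete, here is a toy version of the Paillier cryptosystem, one well-known partially homomorphic scheme, chosen here purely for illustration. Multiplying two ciphertexts yields a ciphertext of the sum of the plaintexts, so an untrusted server could total encrypted values without ever seeing them. The primes are deliberately tiny so the arithmetic is easy to follow; this is not secure, and real use needs much larger keys and a vetted library.

```python
import math
import secrets

# Toy key material: tiny primes for readability only. NOT secure.
P, Q = 1009, 1013
N = P * Q                     # public modulus
N_SQ = N * N
G = N + 1                     # common simple choice of generator
LAM = math.lcm(P - 1, Q - 1)  # private: Carmichael's function of N
MU = pow(LAM, -1, N)          # private: inverse of LAM mod N (valid because G = N + 1)


def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N with fresh randomness r, so equal inputs give different ciphertexts."""
    if not 0 <= m < N:
        raise ValueError("plaintext out of range")
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover m using the private values LAM and MU."""
    x = pow(c, LAM, N_SQ)
    return ((x - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Homomorphic addition: the product of ciphertexts encrypts the sum (mod N)."""
    return (c1 * c2) % N_SQ


# A server holding only ciphertexts can total the salaries without decrypting them.
salaries = [52_000, 61_000, 47_000]
encrypted = [encrypt(s) for s in salaries]

total_encrypted = encrypted[0]
for c in encrypted[1:]:
    total_encrypted = add_encrypted(total_encrypted, c)

print(decrypt(total_encrypted))  # 160000
```

Paillier supports addition (and multiplication by a known constant) but not multiplying two encrypted values together, which is exactly the kind of restriction on operations that separates partially homomorphic schemes from fully homomorphic ones.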

There is a trade-off between speed and security in any encryption.

Masking and tokenization are two further methods that may be used to de-identify data.

Masking obscures sensitive values by replacing them with realistic data of the same type, while leaving the other parts of the record intact.

For example, every value in the city field might be replaced with a different city.

The original data is visible only to those with the proper permissions, yet the masked version is still useful for many applications.
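A minimal sketch of static masking, assuming a simple policy: replace the city with a different, plausible city and hide all but the last four digits of a card number, leaving other fields untouched. The field names, the substitute city list, and the `mask_record` helper are illustrative assumptions; the seeded random generator is used only so the example is repeatable.

```python
import random

# Illustrative pool of substitute values of the same type as the real data.
SUBSTITUTE_CITIES = ["Bristol", "Cardiff", "Glasgow", "Leeds", "Norwich"]


def mask_record(record: dict, rng: random.Random) -> dict:
    """Return a masked copy: sensitive fields replaced, everything else kept."""
    masked = dict(record)  # never modify the original record
    # Swap the city for a different, plausible city.
    choices = [c for c in SUBSTITUTE_CITIES if c != record["city"]]
    masked["city"] = rng.choice(choices)
    # Keep only the last four digits of the card number.
    digits = record["card_number"].replace(" ", "")
    masked["card_number"] = "*" * (len(digits) - 4) + digits[-4:]
    return masked


customer = {"city": "Leeds", "card_number": "4111 1111 1111 1234", "basket_total": 42.50}
masked = mask_record(customer, random.Random(0))

print(masked["card_number"])   # ************1234
print(masked["basket_total"])  # 42.5
```

The masked copy can be handed to developers or analysts, while the original record stays behind the access controls.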

Tokenization is similar, except that it replaces important or sensitive portions of the dataset with randomly generated, meaningless tokens.

Unlike encryption, there is no mathematical way to reconstruct the original data from the tokens (with many forms of encryption, reconstruction is possible given enormous amounts of computing power).

This is because the tokens are chosen at random rather than calculated from the original data; the only way back is through the securely stored table that maps each token to its original value.

Additionally, tokenization (like masking) is usually applied to specific fields within a record, whereas encryption is typically applied to the whole record.
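The difference from encryption is easiest to see in code. In this sketch, a hypothetical `TokenVault` issues random tokens with Python's `secrets` module and holds the only token-to-value mapping; nothing about a token is computed from the value it replaces. Only the chosen fields of the record are tokenized. Storing and protecting the vault separately from the tokenized data is assumed, not shown.

```python
import secrets


class TokenVault:
    """Hypothetical token store. The mapping lives only here and must be protected."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so the same value always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)  # random, not derived from `value`
        while token in self._token_to_value:   # guard against a (very unlikely) clash
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only callers with access to the vault can perform this lookup.
        return self._token_to_value[token]


def tokenize_fields(record: dict, fields: set[str], vault: TokenVault) -> dict:
    """Replace only the listed fields; the rest of the record stays readable."""
    return {k: vault.tokenize(v) if k in fields else v for k, v in record.items()}


vault = TokenVault()
row = {"email": "a.customer@example.com", "national_id": "QQ123456C", "plan": "premium"}
safe_row = tokenize_fields(row, {"email", "national_id"}, vault)

print(safe_row["plan"])                     # premium
print(vault.detokenize(safe_row["email"]))  # a.customer@example.com
```

Returning the same token for a repeated value keeps tokenized data joinable across tables; issuing a fresh token every time would reveal less (for example, how often a value recurs) but lose that ability.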

Finally, keep in mind that data security is a highly specialized field, so you should seek expert advice when drawing up your data strategy.

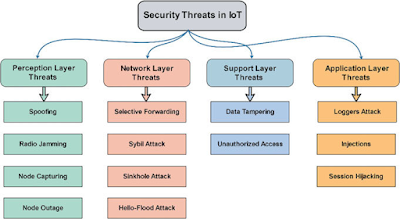

Threats posed by IoT.

The ever-growing number of connected gadgets and "things" we have let into our personal and professional lives gives hackers more avenues of attack.

A recent study found that 10 million IoT devices contain security flaws that could be exploited to gain unauthorized access to data.

Think of each networked device as a "door" into your business that, like any other door, must be kept closed and protected from intruders.

Even your grandma probably knows the value of firewalls and virus scanners on her home computer, but she is unlikely to be aware of the possible risk posed by her microwave, refrigerator, or smart toothbrush.

Simply put, more gadgets equal more potential points of entry for hackers after your data.

Even if it is not immediately clear why a hacker would want access to your smart refrigerator, the usual plan is to use it as a stepping stone to other devices where the real jackpot lies.

Attacks may appear as fake fault alerts or as requests to install patches or upgrades, which give the attacker a back door into your network.

Sometimes they will simply try to get you to phone a "customer service" number so they can scam you out of money!

Connected toys, cars, and even medical equipment have all been shown to be vulnerable to attack, and new flaws are discovered daily, as fast as manufacturers can fix them.

Given this, it should be obvious that any business working with IoT-related devices must take security seriously.

Because so many IoT devices are exploited through their default passwords or login credentials, changing these is a crucial first practical step.

Make sure your customers are encouraged to do the same if you provide IoT devices.

This is another situation in which adopting a "minimization" philosophy can pay off.

Do you really need your equipment to connect and interface with a large number of other devices when there may be no clear benefit to the user in having them talk to each other? Of course, most devices do need to interface with a smartphone or computer app so the user can control them.

Another essential element of any data governance policy is an audit of all IoT and connected devices.

Make sure you know precisely "what is talking to what" and "what they are talking about" on your network.
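As a sketch of what such an audit might check, assume you have exported a device inventory and a list of observed connections (source, destination, port), for example from your router or network monitoring tool. The hypothetical `audit` function below flags devices still on default credentials, devices missing from the inventory, and connections to destinations a device is not expected to talk to. All device names, addresses, and fields are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    allowed_destinations: frozenset[str]  # what this device is expected to talk to
    default_credentials: bool             # True if the factory login was never changed


# Hypothetical inventory, keyed by device address.
INVENTORY = {
    "10.0.0.21": Device("meeting-room-camera", frozenset({"10.0.0.5"}), False),
    "10.0.0.34": Device("smart-fridge", frozenset({"vendor-cloud.example"}), True),
}

# Hypothetical observed connections: (source, destination, port).
observed = [
    ("10.0.0.21", "10.0.0.5", 443),
    ("10.0.0.34", "10.0.0.9", 22),               # fridge reaching an internal server
    ("10.0.0.77", "vendor-cloud.example", 443),  # device nobody has registered
]


def audit(inventory, connections):
    """Return human-readable findings about "what is talking to what"."""
    findings = []
    for device in inventory.values():
        if device.default_credentials:
            findings.append(f"{device.name}: default credentials still in use")
    for src, dst, port in connections:
        device = inventory.get(src)
        if device is None:
            findings.append(f"unknown device {src} talking to {dst}:{port}")
        elif dst not in device.allowed_destinations:
            findings.append(f"{device.name}: unexpected connection to {dst}:{port}")
    return findings


for finding in audit(INVENTORY, observed):
    print(finding)
```

Run against these made-up records, the sketch reports the fridge's default credentials, its unexpected connection to an internal server, and the unregistered device, which are the three gaps the audit described above is meant to surface.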

Find Jai on Twitter | LinkedIn | Instagram

References And Further Reading

- Roff, HM and Moyes, R (2016) Meaningful Human Control, Artificial Intelligence and Autonomous Weapons, Briefing paper prepared for the Informal Meeting of Experts on Lethal Autonomous Weapons Systems, UN Convention on Certain Conventional Weapons, April, article36.org/wp-content/uploads/2016/04/MHC-AI-and-AWS-FINAL.pdf (archived at https://perma.cc/LE7C-TCDV)

- Wakefield, J (2018) The man who was fired by a machine, BBC, 21 June, www.bbc.co.uk/news/technology-44561838 (archived at https://perma.cc/KWD2-XPGR)

- Kande, M and Sönmez, M (2020) Don’t fear AI. It will lead to long-term job growth, WEF, 26 October, www.weforum.org/agenda/2020/10/dont-fear-ai-it-will-lead-to-long-term-job-growth/ (archived at https://perma.cc/LY4N-NCKM)

- The Royal Society (2019) Explainable AI: the basics, November, royalsociety.org/-/media/policy/projects/explainable-ai/AI-and-interpretability-policy-briefing.pdf (archived at https://perma.cc/XXZ9-M27U)

- Hao, K (2019) Training a single AI model can emit as much carbon as five cars in their lifetimes, MIT Technology Review, 6 June, www.technologyreview.com/2019/06/06/239031/training-a-single-ai-model-can-emit-as-much-carbon-as-five-cars-in-their-lifetimes/ (archived at https://perma.cc/AYN9-C8X9)

- Najibi, A (2020) Racial discrimination in face recognition technology, SITN Harvard University, 24 October, sitn.hms.harvard.edu/flash/2020/racial-discrimination-in-face-recognition-technology/ (archived at https://perma.cc/F8TC-RPHW)

- McDonald, H (2019) AI expert calls for end to UK use of ‘racially biased’ algorithms, Guardian, 12 December, www.theguardian.com/technology/2019/dec/12/ai-end-uk-use-racially-biased-algorithms-noel-sharkey (archived at https://perma.cc/WX8L-YEK8)

- Dastin, J (2018) Amazon scraps secret AI recruiting tool that showed bias against women, Reuters, 11 October, www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G (archived at https://perma.cc/WYS6-R7CC)

- Johnson, J (2021) Cyber crime: number of breaches and records exposed 2005–2020, Statista, 3 March, www.statista.com/statistics/273550/data-breaches-recorded-in-the-united-states-by-number-of-breaches-and-records-exposed/ (archived at https://perma.cc/BQ95-2YW2)

- Palmer, D (2021) These new vulnerabilities put millions of IoT devices at risk, so patch now, ZDNet, 13 April, www.zdnet.com/article/these-new-vulnerabilities-millions-of-iot-devives-at-risk-so-patch-now/ (archived at https://perma.cc/RM6B-TSL3)