AI has the power to transform business and wider society in ways that are hard to imagine today.

The fundamental shift we're seeing is that, rather than only following predetermined, programmed instructions, machines are now able to make their own judgments.

Computers will increasingly be used for tasks that involve decision-making, rather than just repetitive chores such as applying formulas to numbers in a spreadsheet (which they already excel at).

Inevitably, this will include decisions that affect people's lives.

Amazon, for example, is known to have used AI algorithms to fire human workers.

In that case, it emerged that machines handled the entire process of monitoring and firing employees.

Beyond that, we know that AI has been used in weapons development to create tools that can decide whether to kill people (the UN currently states that it is unacceptable for such systems to be allowed to do this).

What about situations in which a machine has no choice but to cause harm?

A typical scenario used when debating AI ethics is how a self-driving vehicle should behave when it must decide whether to collide with a pedestrian or a brick wall (potentially injuring its own driver).

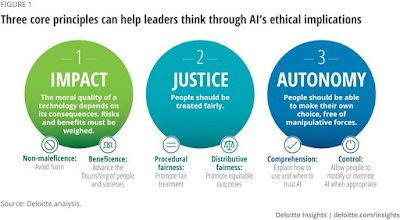

These are extreme examples, but they are relevant to the ethical decisions you will need to make if you are responsible for deploying AI-powered, automated decision-making in any organization.

The most promising AI technologies all have very strong potential benefits, but if they are handled improperly, they can also have very strong potential drawbacks.

Machine vision can identify malignant growths in medical images, but it can also be used by authoritarian governments to monitor their populations.

Natural language processing makes it easier than ever to communicate with machines, and it can scan social media posts for signs that someone may be depressed or suicidal.

But it can also be used to impersonate people in order to carry out fraud and phishing attacks.

And although we can use intelligent robots to explore the depths of the ocean or outer space, assist people with disabilities, and clean up the environment, they are also being built for use as lethal weapons.

It's crucial that we consider where our own implementations of AI fall on this spectrum.

Can we be certain that an idea such as the data-driven customer segmentation in our ice cream parlor example falls on the right side of the moral divide?

Hopefully, no one reading this book intends to use AI to harm anybody.

In that case, consent and privacy are likely to be the main concerns.

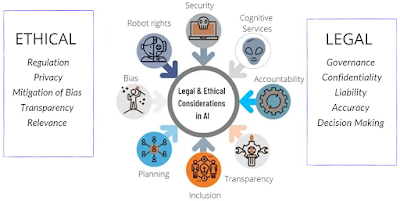

If we accept that individuals have a right to privacy, it follows that it is unethical to collect, use, or share anyone's personal data without their consent.

Obtaining consent for any work we carry out with customers' private data is therefore a crucial first step.

Of course, in many jurisdictions this is now also a legal requirement.
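As a minimal sketch of treating consent as a hard gate before any analysis runs, the example below keeps only the customers who opted in to a specific purpose, such as segmentation. The `Customer` record, its `consented_purposes` field, the purpose labels, and `records_usable_for` are all hypothetical names chosen for illustration, not part of any particular system or law.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Customer:
    """Hypothetical customer record; field names are illustrative only."""
    customer_id: str
    # Purposes this customer has explicitly opted in to (hypothetical labels).
    consented_purposes: frozenset = field(default_factory=frozenset)


def records_usable_for(customers, purpose):
    """Keep only customers who agreed to this specific use of their data.

    Anyone without a recorded opt-in is excluded, rather than included by default.
    """
    return [c for c in customers if purpose in c.consented_purposes]


customers = [
    Customer("c1", frozenset({"segmentation", "marketing_email"})),
    Customer("c2", frozenset({"marketing_email"})),
    Customer("c3"),  # no consent recorded
]
print([c.customer_id for c in records_usable_for(customers, "segmentation")])  # ['c1']
```

Recording consent per purpose, rather than as a single yes/no flag, reflects the idea that agreeing to marketing emails is not the same as agreeing to be profiled.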

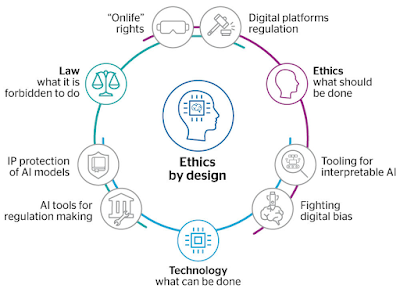

However, when it comes to governing your data or AI initiatives, ethics and law need to be considered separately.

For instance, it's unlikely that Amazon's automatic termination of workers is against the law.

On the other hand, it's fairly easy to make a compelling case that it is unethical, since it involves giving machines the authority to make decisions that can significantly affect people's lives.

Furthermore, it's far from certain that the people involved ever agreed to give machines this level of control over their lives.

Even if you are only using computers to run processes more efficiently, rather than to make such life-altering decisions, you need to be careful.

In 2018, IT contractor Ibrahim Diallo found he couldn't get into his Los Angeles workplace because his security pass had been deactivated.

Once he was let in, he was unable to log in to any of the systems he needed for his work, and shortly afterward security staff arrived to escort him out of the building.

His pay was also stopped.

Neither his manager nor any other senior staff knew what had happened. After he had been kept out of work for three weeks, they discovered that an HR error had wrongly recorded him as fired.

From that point, automated systems had kicked in, and there was no way to override them manually.

Because of the stress, and his managers' inability to do anything about it, he ended up leaving the company and finding work elsewhere.
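One way to avoid the situation Diallo found himself in is to ensure that high-impact automated actions cannot run on a trigger alone: a named person must approve them, and can override them. The sketch below illustrates that human-in-the-loop gate under stated assumptions; `TerminationRequest`, `review`, `execute`, and `revoke_pass` are hypothetical names, not taken from any real HR system.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    OVERRIDDEN = "overridden"


@dataclass
class TerminationRequest:
    """Hypothetical record raised by an automated HR process."""
    employee_id: str
    reason: str
    status: Status = Status.PENDING_REVIEW
    reviewer: Optional[str] = None


def review(request: TerminationRequest, reviewer: str, approve: bool) -> TerminationRequest:
    """A named person must approve or override the request before anything happens."""
    if request.status is not Status.PENDING_REVIEW:
        raise ValueError("request has already been reviewed")
    request.reviewer = reviewer
    request.status = Status.APPROVED if approve else Status.OVERRIDDEN
    return request


def execute(request: TerminationRequest, actions: List[Callable[[str], str]]) -> List[str]:
    """Run downstream actions (revoke pass, stop pay, ...) only after human approval."""
    if request.status is not Status.APPROVED:
        return []  # nothing irreversible runs on the automated trigger alone
    return [action(request.employee_id) for action in actions]


def revoke_pass(employee_id: str) -> str:
    """Stand-in for a real downstream action."""
    return f"security pass revoked for {employee_id}"


req = TerminationRequest("emp-042", "contract end date found in HR system")
print(execute(req, [revoke_pass]))  # []  (still pending review)
review(req, reviewer="line_manager", approve=False)
print(req.status.value)             # overridden
print(execute(req, [revoke_pass]))  # []  (a person stopped it)
```

The design choice is that the default state does nothing: an erroneous record, like the one in Diallo's case, stalls at review instead of cascading through every connected system.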

What about workers who are not dismissed by AI, but lose their jobs because of it?

According to some sources, IBM's headcount fell by 25% between 2012 and 2019, and many of the tasks previously performed by people who left were taken over by machines.

There are certainly compelling arguments that AI creates more jobs for human workers than it eliminates.

In addition, the types of jobs it creates, such as those for engineers, scientists, data storytellers, and translators, are arguably more fulfilling than the jobs that are lost.

The World Economic Forum estimates that automation may displace 85 million human jobs by 2025.

On the other hand, it also estimates that 97 million new jobs may be created over the same period as a result of the rise of AI and other sophisticated automated technologies.

Does that mean automation raises no ethical problems when it comes to replacing human labor? Not really, I'd say!

It means you need to examine carefully how your own AI, automation, and data projects will affect your human workforce.

- Is there a chance that someone will be made redundant?

- If so, could they be given other roles that suit their skills and responsibilities, ideally ones that draw on human qualities computers still lack, such as creativity, empathy, and communication?

- Most importantly, is there a chance that their role could become redundant whether or not you go ahead with your initiative?

- For instance, if you didn't implement it, would your company become less competitive, to the point where you could no longer afford to keep paying them?

A further cause for concern is an AI technique known as the generative adversarial network (GAN), which can create uncannily realistic representations of real people, as well as text and language.

This is the technology behind many of the "deepfake" images that have proliferated online.

This has the potential to cause a great deal of harm to both individuals and society.

For instance, it is very easy to find fake pornographic images of just about any celebrity online.

It has also been used for political purposes, to create images of politicians and world leaders saying or doing things they never said or did in real life.

This can worsen the problems caused by misinformation and by how easily false or misleading content spreads online, potentially undermining the democratic process.

There are many legitimate and entirely ethical reasons a company might want to use GAN technology: generating "synthetic data" and building text-to-image systems, which essentially let us create pictures by describing what they should look like, are just two.

The ethical risk is that the same tools can also be used to produce content that spreads misinformation or misrepresents specific people or groups of people.

Once again, if you use this technology, you need to understand the risks and be clear about where you stand ethically.

From an ethical perspective, recommendation technology might seem like a safe use of AI, but it carries risks too.

Content recommendation systems, such as Facebook's algorithms, show us more content similar to what we have already looked at.

This can result in "filter bubbles". For instance, if you start reading conspiracy theory websites discussing topics such as whether 9/11 was an "inside job", or the more recent QAnon conspiracy, you will soon notice similar items starting to appear in your news feed.

The same is true if you lean strongly to the left or right and regularly read news articles that support your viewpoint.

If you only see stories that fit a particular narrative, the information available to you for forming your own opinions and beliefs can gradually become one-sided.

Again, by creating this echo-chamber effect, recommendation systems can have seriously damaging effects on the democratic process.
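To see how a filter bubble can emerge from a perfectly ordinary ranking rule, here is a deliberately naive content-based recommender. It is not Facebook's algorithm (which is not public); it assumes a toy catalogue in which each item has a single topic label, items are ranked by how often their topic already appears in the user's history, and the user always clicks the top recommendation. `CATALOGUE`, `recommend`, and `simulate` are hypothetical names for illustration.

```python
from collections import Counter

TOPICS = ("politics_left", "politics_right", "science", "sport")
# Hypothetical catalogue of (item_id, topic) pairs, ten items per topic.
CATALOGUE = [(f"{topic}-{i}", topic) for topic in TOPICS for i in range(10)]


def recommend(history_topics, seen, k=3):
    """Rank unseen items by how often their topic already appears in the user's history."""
    counts = Counter(history_topics)
    unseen = [item for item in CATALOGUE if item[0] not in seen]
    # sorted() is stable, so items with equal scores keep catalogue order.
    return sorted(unseen, key=lambda item: counts[item[1]], reverse=True)[:k]


def simulate(start_topics, rounds=5):
    """Assume the user always clicks the top recommendation, feeding it back into history."""
    history = list(start_topics)
    seen = set()
    feeds = []
    for _ in range(rounds):
        feed = recommend(history, seen)
        feeds.append([topic for _, topic in feed])
        item_id, topic = feed[0]
        seen.add(item_id)
        history.append(topic)
    return feeds, history


feeds, history = simulate(["science", "politics_right", "politics_right"])
print(feeds[0])          # ['politics_right', 'politics_right', 'politics_right']
print(Counter(history))  # Counter({'politics_right': 7, 'science': 1})
```

Although the reader started with some interest in science, every feed in the simulation is made up entirely of the topic they had read most, and each click reinforces it. Real systems typically add diversity or exploration terms to counter exactly this feedback loop.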

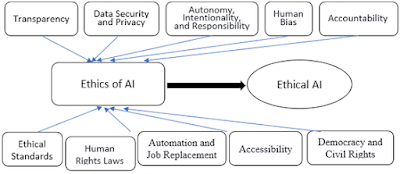

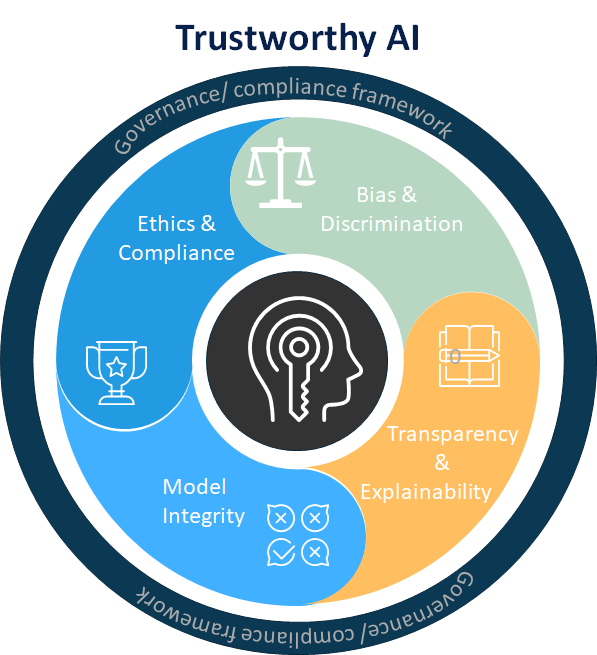

Transparency is yet another issue.

If you are going to put people in a situation where machines make decisions about their lives, you should at the very least be able to explain how those machines reach their decisions.

We've already discussed AI's "black box" problem: algorithms can become so complex that it is extremely difficult for humans to fully understand why they behave the way they do.

This is made worse by the fact that some vendors deliberately keep their AI opaque to make it harder for others to copy.

Ethics is a personal matter that each of us must consider for ourselves, but you may well find that you agree with the many people who believe that decisions affecting people's lives should be explainable.

Interestingly, some people think AR and VR could help here, by letting us explore and interrogate how algorithms operate in ways that aren't possible simply by looking at code on a screen, or even at 2D representations of those operations.

Transparency is one of the core requirements of the OECD's AI Principles (more on these below).
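To make the idea of an explainable decision concrete, one approach is to use a model whose output breaks down into per-factor contributions that can be shown to the person affected and challenged. The sketch below assumes a simple linear scoring model; the feature names, weights, bias, and threshold are invented for illustration, and it only flags a case for human review rather than deciding anything. Explaining genuinely black-box models usually requires separate, post-hoc explanation techniques.

```python
# Hypothetical weights for an illustrative linear scoring model.
WEIGHTS = {"years_of_service": 0.4, "missed_shifts": -1.5, "customer_rating": 2.0}
BIAS = -3.0
THRESHOLD = 0.0


def score_with_explanation(features):
    """Score a case and return each factor's contribution alongside the decision."""
    contributions = {name: weight * features[name] for name, weight in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    # A low score only flags the case for a person to look at; it decides nothing.
    decision = "flag_for_human_review" if score < THRESHOLD else "no_action"
    # Largest effects first, so the explanation leads with what mattered most.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return decision, score, ranked


decision, score, ranked = score_with_explanation(
    {"years_of_service": 5, "missed_shifts": 2, "customer_rating": 1.2}
)
print(decision, round(score, 2))  # flag_for_human_review -1.6
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.1f}")
```

Because every contribution is visible, the person affected can see that two missed shifts outweighed their rating, and can dispute that specific input if it is wrong.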

AI's effects on the environment also raise ethical questions.

Training AI models can take an enormous amount of computing power, and all that computing uses a lot of energy.

According to one estimate, training certain NLP machine learning models can produce around 284,000 kg (626,000 pounds) of CO2, roughly equivalent to the yearly carbon emissions of 17 Americans.
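The unit conversion and the per-person comparison can be checked with simple arithmetic. The sketch below uses only the two figures quoted in the text (626,000 pounds and 17 people) plus the exact definition of the international pound (0.45359237 kg); the per-person value it prints is simply what those two figures imply, not an independent statistic.

```python
LB_TO_KG = 0.45359237  # exact definition of the international avoirdupois pound


def pounds_to_kg(lb):
    """Convert a mass in pounds to kilograms."""
    return lb * LB_TO_KG


total_lb = 626_000  # CO2 estimate quoted in the text
people = 17         # "yearly carbon emissions of 17 Americans", also from the text

total_kg = pounds_to_kg(total_lb)
per_person_kg = total_kg / people

print(f"{total_kg:,.0f} kg CO2")                             # 283,949 kg CO2
print(f"{per_person_kg / 1000:.1f} t CO2 per person per year")  # 16.7 t CO2 per person per year
```

In other words, the estimate is roughly 284 tonnes of CO2, implying about 16.7 tonnes per person per year for the comparison to hold.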

Thanks to carbon offsetting, some AI providers, such as Google, claim to be carbon neutral, while others, such as Microsoft, have set targets they won't reach for years or decades.

Of course, AI can also improve operational efficiency in many sectors, which may reduce its environmental impact.

For instance, both the network-wide strategies used by power and utility companies and smart home thermostats aim to use energy more efficiently and reduce emissions.

There is no clear-cut answer to the question "Is AI good or bad for the environment?", because it depends on the specific application, which means each application needs to be evaluated on its own merits for environmental impact.

The same is true of the ethics of particular AI capabilities, such as facial recognition and natural language processing, and of their implications for jobs.

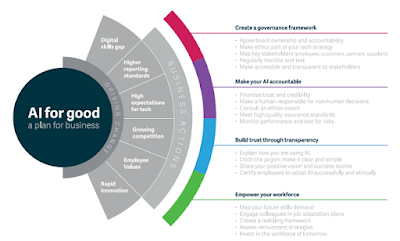

Given all these concerns, it's no surprise that the AI industry and wider civil society organizations are starting to take the ethics of advanced technology seriously.

The Organization for Economic Co-operation and Development (OECD) has released its AI Principles, which include the following:

AI development should be done in a way that respects the law, human rights, democracy, and diversity; it should be transparent to allow it to be understood and challenged; and organizations should be held responsible for the results of their AI initiatives.

Google has also published its own principles for ethical AI use.

These include requirements that AI applications be ethical, impartial, trustworthy, and respectful of users' privacy.

The company has also said that it will seek to avoid harmful or abusive applications of AI.

Microsoft, meanwhile, runs a program called AI for Good that encourages the use of AI to address environmental, social, healthcare, and humanitarian issues.

My final point for this section, and one I believe deserves careful thought, is the underuse of AI.

Simply put, there are many situations in which not using AI is a bad idea from an ethical standpoint.

- If there are problems in our business, society, or the wider world that AI could help solve, but we choose not to use it (perhaps because of other factors covered here, such as environmental impact or transparency concerns), doesn't that imply we have an ethical obligation to use it?

- Is it appropriate, for instance, to use facial recognition technology to locate someone who may be in danger, even if they haven't consented to being tracked in this way?

Police in China and the UK have used it to find missing people.

My suggestion is that any company intending to use AI should establish some kind of "ethics committee", similar to what Google and Microsoft have done.

Of course, your committee's scope, size, and resources will vary depending on the size of the company.

What matters is having someone responsible for considering all the concerns raised here and how they affect your activities.

Find Jai on Twitter | LinkedIn | Instagram

References And Further Reading

- Roff, HM and Moyes, R (2016) Meaningful Human Control, Artificial Intelligence and Autonomous Weapons, Briefing paper prepared for the Informal Meeting of Experts on Lethal Autonomous Weapons Systems, UN Convention on Certain Conventional Weapons, April, article36.org/wp-content/uploads/2016/04/MHC-AI-and-AWS-FINAL.pdf (archived at https://perma.cc/LE7C-TCDV)

- Wakefield, J (2018) The man who was fired by a machine, BBC, 21 June, www.bbc.co.uk/news/technology-44561838 (archived at https://perma.cc/KWD2-XPGR)

- Kande, M and Sönmez, M (2020) Don’t fear AI. It will lead to long-term job growth, WEF, 26 October, www.weforum.org/agenda/2020/10/dont-fear-ai-it-will-lead-to-long-term-job-growth/ (archived at https://perma.cc/LY4N-NCKM)

- The Royal Society (2019) Explainable AI: the basics, November, royalsociety.org/-/media/policy/projects/explainable-ai/AI-and-interpretability-policy-briefing.pdf (archived at https://perma.cc/XXZ9-M27U)

- Hao, K (2019) Training a single AI model can emit as much carbon as five cars in their lifetimes, MIT Technology Review, 6 June, www.technologyreview.com/2019/06/06/239031/training-a-single-ai-model-can-emit-as-much-carbon-as-five-cars-in-their-lifetimes/ (archived at https://perma.cc/AYN9-C8X9)

- Najibi, A (2020) Racial discrimination in face recognition technology, SITN Harvard University, 24 October, sitn.hms.harvard.edu/flash/2020/racial-discrimination-in-face-recognition-technology/ (archived at https://perma.cc/F8TC-RPHW)

- McDonald, H (2019) AI expert calls for end to UK use of ‘racially biased’ algorithms, Guardian, 12 December, www.theguardian.com/technology/2019/dec/12/ai-end-uk-use-racially-biased-algorithms-noel-sharkey (archived at https://perma.cc/WX8L-YEK8)

- Dastin, J (2018) Amazon scraps secret AI recruiting tool that showed bias against women, Reuters, 11 October, www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G (archived at https://perma.cc/WYS6-R7CC)

- Johnson, J (2021) Cyber crime: number of breaches and records exposed 2005–2020, Statista, 3 March, www.statista.com/statistics/273550/data-breaches-recorded-in-the-united-states-by-number-of-breaches-and-records-exposed/ (archived at https://perma.cc/BQ95-2YW2)

- Palmer, D (2021) These new vulnerabilities put millions of IoT devices at risk, so patch now, ZDNet, 13 April, www.zdnet.com/article/these-new-vulnerabilities-millions-of-iot-devives-at-risk-so-patch-now/ (archived at https://perma.cc/RM6B-TSL3)