An opinionated look at our trade

Introduction

There is general consensus that the software development process is imperfect, sometimes grossly so, for any number of human (management, skill, communication, clarity, etc.) and technological (tooling, support, documentation, reliability, etc.) reasons. And yet, when it comes to talking about software development, we apply a variety of scientific/formal terms:

- Almost every college / university has a Computer Science curriculum.

- We use terms like "software engineer" on our resumes.

- We use the term "art" (as in "art of software development") to acknowledge the creative/creation process (the first volume of Donald Knuth's The Art of Computer Programming was published in 1968)

- There is even a "Manifesto for Software Craftsmanship" created in 2009 (the "Further Reading" link is a lot more interesting than the Manifesto itself.)

The literature on software development is full of phrases that talk about methodologies, giving the ignorant masses, newbie programmers, managers, and even senior developers the warm fuzzy illusion that there is some repeatable process to software development that warrants words like "science" and "engineer." Those who recognize the loosey-goosey quality of those methodologies probably feel more comfortable describing the software development process as an "art" or a "craft", possibly bordering on "witchcraft."

The Etymology of the Terms we Use

By way of introduction, we'll use the etymology of these terms as a baseline of meaning.

Science

"what is known, knowledge (of something) acquired by study; information;" also "assurance of knowledge, certitude, certainty," from Old French science "knowledge, learning, application; corpus of human knowledge" (12c.), from Latin scientia "knowledge, a knowing; expertness," from sciens (genitive scientis) "intelligent, skilled," present participle of scire "to know," probably originally "to separate one thing from another, to distinguish," related to scindere "to cut, divide," from PIE root *skei- "to cut, to split" (source also of Greek skhizein "to split, rend, cleave," Gothic skaidan, Old English sceadan "to divide, separate;" see shed (v.)).

From late 14c. in English as "book-learning," also "a particular branch of knowledge or of learning;" also "skillfulness, cleverness; craftiness." From c. 1400 as "experiential knowledge;" also "a skill, handicraft; a trade." From late 14c. as "collective human knowledge" (especially that gained by systematic observation, experiment, and reasoning). Modern (restricted) sense of "body of regular or methodical observations or propositions concerning a particular subject or speculation" is attested from 1725; in 17c.-18c. this concept commonly was called philosophy. Sense of "non-arts studies" is attested from 1670s.

Engineer

mid-14c., enginour, "constructor of military engines," from Old French engigneor "engineer, architect, maker of war-engines; schemer" (12c.), from Late Latin ingeniare (see engine); general sense of "inventor, designer" is recorded from early 15c.; civil sense, in reference to public works, is recorded from c. 1600 but not the common meaning of the word until 19c (hence lingering distinction as civil engineer). Meaning "locomotive driver" is first attested 1832, American English. A "maker of engines" in ancient Greece was a mekhanopoios.

Art

early 13c., "skill as a result of learning or practice," from Old French art (10c.), from Latin artem (nominative ars) "work of art; practical skill; a business, craft," from PIE *ar-ti- (source also of Sanskrit rtih "manner, mode;" Greek arti "just," artios "complete, suitable," artizein "to prepare;" Latin artus "joint;" Armenian arnam "make;" German art "manner, mode"), from root *ar- "fit together, join" (see arm (n.1)).

In Middle English usually with a sense of "skill in scholarship and learning" (c. 1300), especially in the seven sciences, or liberal arts. This sense remains in Bachelor of Arts, etc. Meaning "human workmanship" (as opposed to nature) is from late 14c. Sense of "cunning and trickery" first attested c. 1600. Meaning "skill in creative arts" is first recorded 1610s; especially of painting, sculpture, etc., from 1660s. Broader sense of the word remains in artless.

Craft

Old English cræft (West Saxon, Northumbrian), -creft (Kentish), originally "power, physical strength, might," from Proto-Germanic *krab-/*kraf- (source also of Old Frisian kreft, Old High German chraft, German Kraft "strength, skill;" Old Norse kraptr "strength, virtue"). Sense expanded in Old English to include "skill, dexterity; art, science, talent" (via a notion of "mental power"), which led by late Old English to the meaning "trade, handicraft, calling," also "something built or made." The word still was used for "might, power" in Middle English.

Methodology (Method)

And for good measure:

early 15c., "regular, systematic treatment of disease," from Latin methodus "way of teaching or going," from Greek methodos "scientific inquiry, method of inquiry, investigation," originally "pursuit, a following after," from meta- "after" (see meta-) + hodos "a traveling, way" (see cede). Meaning "way of doing anything" is from 1580s; that of "orderliness, regularity" is from 1610s. In reference to a theory of acting associated with Russian director Konstantin Stanislavsky, it is attested from 1923.

Common Themes

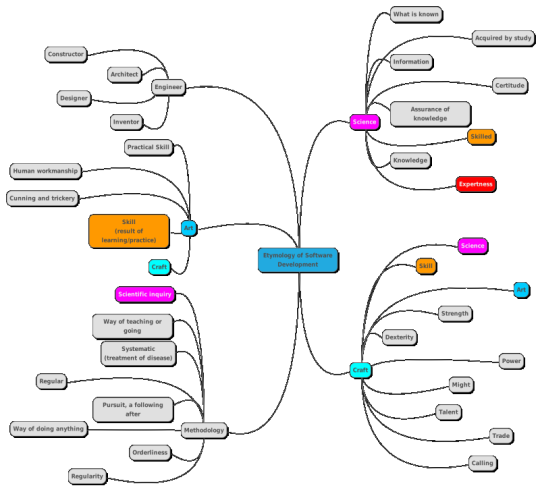

Looking at the etymology of these words reveals some common themes (created using the amazing online mind-mapping tool MindMup):

What associations do we glean from this?

- Science: Skill

- Art: Craft, Skill

- Craft: Art, Skill, Science

- Methodology: Science

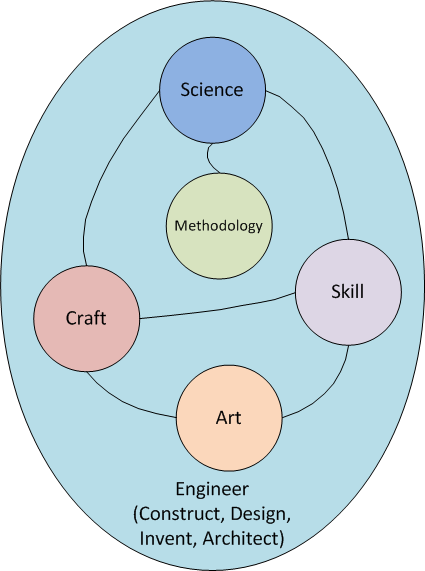

Interestingly, the term "engineer" does not directly associate with the etymology of science, art, craft, or methodology, but one could say those concepts are facets of "engineering" (diagram created with the old horse Visio):

This is arbitrary and doesn't mean that we're implying right away that software development is engineering, but it does give one an idea of what it might mean to call oneself a "software engineer."

The Scientific Method

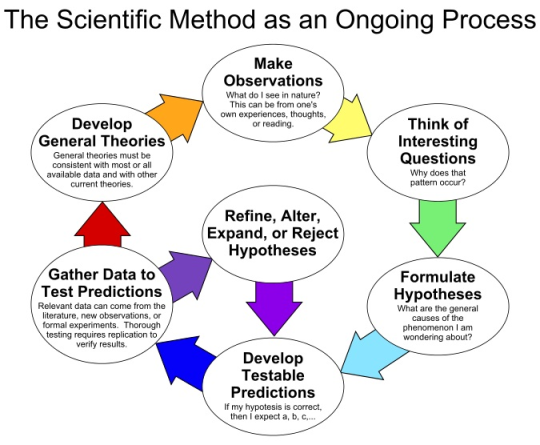

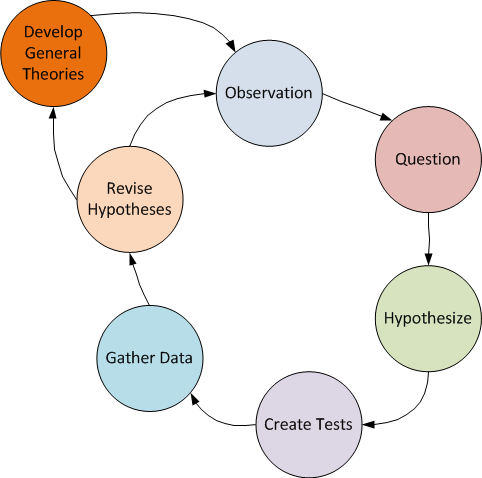

How many software developers could actually, off the top of their head, describe the scientific method? Here is one description:

The scientific method is an ongoing process, which usually begins with observations about the natural world. Human beings are naturally inquisitive, so they often come up with questions about things they see or hear and often develop ideas (hypotheses) about why things are the way they are. The best hypotheses lead to predictions that can be tested in various ways, including making further observations about nature. In general, the strongest tests of hypotheses come from carefully controlled and replicated experiments that gather empirical data. Depending on how well the tests match the predictions, the original hypothesis may require refinement, alteration, expansion or even rejection. If a particular hypothesis becomes very well supported a general theory may be developed.

Although procedures vary from one field of inquiry to another, identifiable features are frequently shared in common between them. The overall process of the scientific method involves making conjectures (hypotheses), deriving predictions from them as logical consequences, and then carrying out experiments based on those predictions. A hypothesis is a conjecture, based on knowledge obtained while formulating the question. The hypothesis might be very specific or it might be broad. Scientists then test hypotheses by conducting experiments. Under modern interpretations, a scientific hypothesis must be falsifiable, implying that it is possible to identify a possible outcome of an experiment that conflicts with predictions deduced from the hypothesis; otherwise, the hypothesis cannot be meaningfully tested.

We can summarize this as an iterative process of:

1. Observation

2. Question

3. Hypothesize

4. Create tests

5. Gather data

6. Revise hypothesis (go to step 3)

7. Develop general theories and test for consistency with other theories and existing data

Software Development: Knowledge Acquisition and Prototyping / Proof of Concept

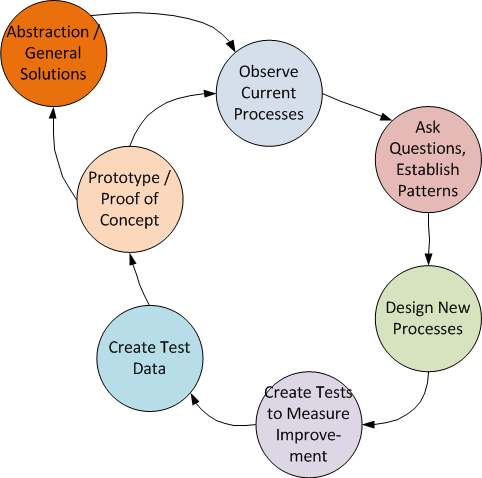

We can map the scientific method steps into the process of the software development that includes knowledge acquisition and prototyping:

1. Observe current processes.

2. Ask questions about current processes to establish patterns.

3. Hypothesize how those processes can be automated and/or improved.

4. Create some tests that measure success/failure and performance/accuracy improvement.

5. Gather some test data for our tests.

6. Create some prototypes / proofs of concept and revise our hypotheses as a result of feedback as to whether the new processes meet existing process requirements, are successful, and/or improve performance/accuracy.

7. Abstract the prototypes into general solutions and verify that they are consistent with other processes and data.
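The test-creation and data-gathering steps above can be made concrete with a small sketch. This is only an illustration under stated assumptions: the function name `improvement_supported`, the 10% threshold, and the timing figures are all hypothetical and not taken from any real project. The point is simply that a hypothesis such as "the new process is at least 10% faster" becomes falsifiable once we write down a measurement that could contradict it.

```python
from statistics import mean


def improvement_supported(old_minutes, new_minutes, min_gain=0.10):
    """Return True when the measurements support the hypothesis that the
    new process is at least `min_gain` (a fraction) faster on average.

    Hypothetical helper for illustration; a real study would also need to
    consider sample size and variance.
    """
    if not old_minutes or not new_minutes:
        raise ValueError("both samples need at least one measurement")
    old_avg = mean(old_minutes)
    if old_avg <= 0:
        raise ValueError("old process timings must average above zero")
    new_avg = mean(new_minutes)
    # Relative reduction in average time taken by the new process.
    gain = (old_avg - new_avg) / old_avg
    return gain >= min_gain


# Made-up timings, in minutes per task, for the old and new process.
old = [30, 34, 32, 31]
new = [24, 27, 25, 26]
print(improvement_supported(old, new))  # True
```

Stating the threshold before gathering the data is what allows the hypothesis to be rejected: with a `min_gain` of 0.25, the same figures would not support it.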

Where we Fail

Software development actually fails at most or all of these steps. The scientific method is typically applied to the observation of nature. As with nature, our observations sometimes lead to the wrong conclusions. Just as we observe the sun going around the earth and erroneously come up with a geocentric theory of the earth, sun, moon, and planets, our understanding of processes is fraught with errors and omissions. As with observing nature, we eventually hit the hard reality that what we understood about the process is incomplete or incorrect. Unlike with nature, we have the added drawback that while we are prototyping and trying to prove that our new software processes are better, the old processes are also evolving, so by the time we publish the application it is, like a new car being driven off the lot, already obsolete.

Also, the software development process in general, and the knowledge acquisition phase in particular, typically doesn't determine how to measure success/failure and performance/accuracy improvement of an existing process, simply because we, as developers, lack the tools and resources to measure existing processes (there are exceptions, of course). We are, after all, attempting to replace a process, not just understand an existing, immutable process and build on that knowledge. How do we accurately measure the cost/time savings of a new process when we can barely describe an existing process? How do we compare the training required to efficiently utilize a new process when everyone has "cultural knowledge" of the old process? How do we overcome the resistance to new technology? Nature doesn't care if we improve on a biological process by creating vaccines and gene therapies to cure cancer, but secretaries and entrenched engineers alike do very much care about new processes that require new and different skills and potentially threaten to eliminate their jobs.

And frequently enough, the idea of how an existing process can be improved does not come from observation and questions (which are incomplete or omitted entirely) but begins at step #3: imagining some software solution that "fixes" whatever the manager or CEO, and yes, in many cases the engineer, thinks can be improved upon. We see this in the glut of new frameworks, new languages, and forked GitHub repos that all claim to improve upon someone else's solution to a supposedly broken process. The result is a house of cards of poorly documented and buggy implementations. The result is why Stack Overflow exists.

As to the last step, abstracting the prototypes into general solutions, this is the biggest failure of all of software development -- re-use. As Douglas Schmidt wrote in C++ Report magazine, in 1999 (source):

Although computing power and network bandwidth have increased dramatically in recent years, the design and implementation of networked applications remains expensive and error-prone. Much of the cost and effort stems from the continual re-discovery and re-invention of core patterns and framework components throughout the software industry.

Note that he wrote this 17 years ago (at the time of this article), and it remains true today.

And:

Reuse has been a popular topic of debate and discussion for over 30 years in the software community. Many developers have successfully applied reuse opportunistically, e.g., by cutting and pasting code snippets from existing programs into new programs. Opportunistic reuse works fine in a limited way for individual programmers or small groups. However, it doesn't scale up across business units or enterprises to provide systematic software reuse. Systematic software reuse is a promising means to reduce development cycle time and cost, improve software quality, and leverage existing effort by constructing and applying multi-use assets like architectures, patterns, components, and frameworks.

Like many other promising techniques in the history of software, however, systematic reuse of software has not universally delivered significant improvements in quality and productivity.

The Silver Lining, Sort Of

Now granted, we can install a variety of flavors of Linux on everything from desktop computers to single board computers like the Raspberry Pi or Beaglebone. Cross-compilers let us compile and re-use code on multiple processors and hardware architectures. .NET Core is open source, running on Windows, Mac, and Linux. And scripting languages like Python, Ruby, and JavaScript hide the low level compiled implementations in the subconscious mind of the program, enabling code portability for our custom applications. However, we are still stuck in the realm of, as Mr. Schmidt puts it: "Component- and framework-based middleware technologies." Using those technologies, we still have to "re-discover and re-invent" much of the glue that binds these components.

The Engineering Method

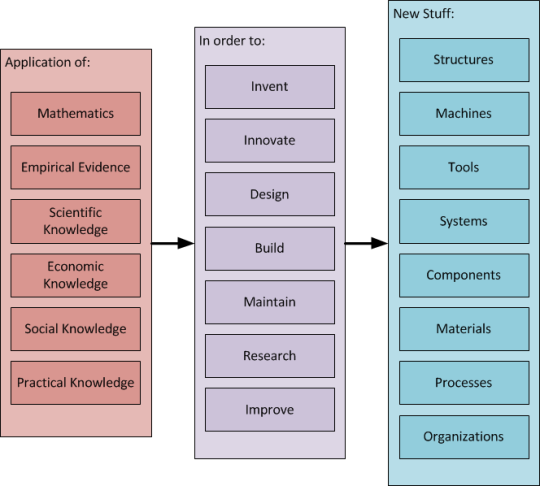

What is engineering?

Engineering is the application of mathematics, empirical evidence and scientific, economic, social, and practical knowledge in order to invent, innovate, design, build, maintain, research, and improve structures, machines, tools, systems, components, materials, processes and organizations. (source)

Seriously? And we have the hubris to call what we do software engineering?

"Application of" can only assume a deep understanding of whatever items in the list are applicable to what we're building.

"In order to" can only assume proficiency in the set of necessary skills.

And the "New stuff" certainly assumes that 1) it works, 2) it works well enough to be used, and 3) people will want it and know how to use it.

Engineering implies knowledge, skill, and successful adoption of the end product. To that end, there are formal methodologies that have been developed, for example, the Department of Energy Systems Engineering Methodology (SEM):

The Department of Energy Systems Engineering Methodology (SEM) provides guidance for information systems engineering, project management, and quality assurance practices and procedures. The primary purpose of the methodology is to promote the development of reliable, cost-effective, computer-based solutions while making efficient use of resources. Use of the methodology will also aid in the status tracking, management control, and documentation efforts of a project. (source)

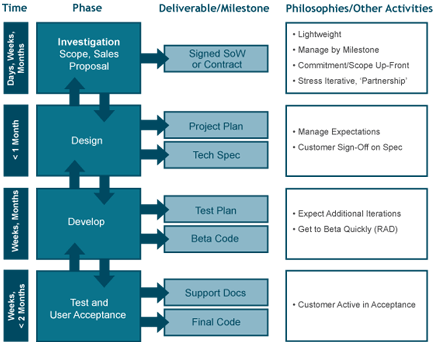

Engineering Process Overview

Notice some of the language:

- to promote the development of:

  - reliable

  - cost-effective

  - computer-based solutions

And:

- the methodology will also aid in the:

  - status tracking

  - management control

  - and documentation efforts

This actually sounds like an attempt to apply the scientific method successfully to software development.

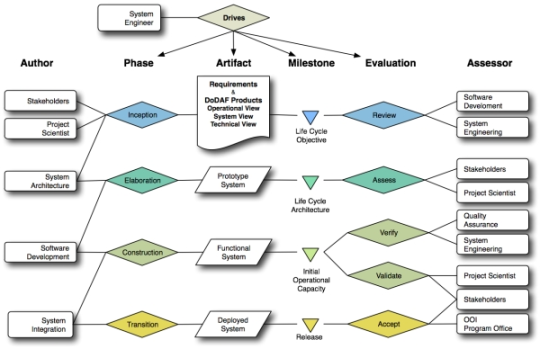

Another Engineering Model - the Spiral Development Model

There are many engineering models to choose from, but here is one more, the Spiral Development Model. It consists of Phases, Reviews, and Iterations (source):

- Phases

  - Inception Phase

  - Elaboration Phase

  - Construction Phase

  - Transition Phase

- Review Milestones Process

  - Life Cycle Objectives Review

  - Life Cycle Architecture Review

  - Initial Operating Capability Review

  - Product Readiness Review + Functional Configuration Audit

- Iterations

  - Iteration Structure Description: Composition of an 8-Week Iteration

  - Design Period Activities

  - Development Period Activities

  - Wrap-Up Period Activities

In each phase, the Custom Engineering Group (CEG) works closely with the customer to establish clear milestones to measure progress and success. (source)

This harkens a wee bit to Agile's "customer collaboration", but notice again the concepts of:

- a project plan

- a test plan

- supporting documentation

- customer sign-off of specifications

And the formality implied in:

- investigation

- design

- development

- test and user acceptance

True software engineering practically demands skilled professionals, whether they are developers, managers, technical writers, contract negotiators, etc. Certainly there is room for people of all skill levels, but the structure of a real engineering team is such that "juniors" are mentored, monitored, and given appropriate tasks for their skill level, and in fact even "senior" people review each other's work.

Where we Fail

Instead, we have Agile Development and its Manifesto (source, bold is mine):

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

How can we conclude anything other than that Agile Development is not engineering?

The Agile Manifesto appears to specifically de-emphasize a scientific method for software development, and it also de-emphasizes the skills actual engineering requires of both software developers and managers, instead emphasizing an ill-defined psychological approach to software development involving people, interactions, collaboration, and flexibility. While these are necessary skills as well, they are not more important than the skills and formal processes that software development requires. In fact, Agile creates an artificial tension between the two sides of each of the bullet points above, often leading to an extreme adoption of the left side, for example, "the code is the documentation."

From real world experience, the Manifesto would be better written replacing the word "over" with "and":

- Individuals and interactions and processes and tools

- Working software and comprehensive documentation

- Customer collaboration and contract negotiation

- Responding to change and following a plan

However, this approach is rarely embraced by over-eager coders who want to dive into a new-fangled technology and start coding, and it also isn't embraced by managers who view "over" as (incorrectly) reducing cost and time to market, vs. "and" as (incorrectly) increasing cost and time to market. As with any approach, a balance must be struck that is appropriate for the business domain and product to be delivered, but rarely does that conversation happen.

Regardless, Agile Development is not engineering. But is it a methodology?

Software Development Methodologies: Are They Really?

[I] chose the Macmillan online dictionary and found this:

Approach (noun): a particular way of thinking about or dealing with something

Philosophy: a system of beliefs that influences someone’s decisions and behaviour. A belief or attitude that someone uses for dealing with life in general

Methodology: the methods and principles used for doing a particular kind of work, especially scientific or academic research

Do we view whatever structure we impose on the software development process as an approach, a philosophy, or a methodology?

So-Called Methodologies

Wikipedia has pages here and here that list dozens of philosophies, and IT Knowledge Portal lists 13 software development methodologies here:

- Agile

- Crystal

- Dynamic Systems Development

- Extreme Programming

- Feature Driven Development

- Joint Application Development

- Lean Development

- Rapid Application Development

- Rational Unified Process

- Scrum

- Spiral (not the Spiral Development Model described earlier)

- System Development Life Cycle

- Waterfall (aka Traditional)

And even this list is missing so-called methodologies like Test Driven Development and Model Driven Development.

As methodology derives from Greek methodos: "scientific inquiry, method of inquiry, investigation", the definition of Methodology from Macmillan seems reasonable enough. Let's look at two of the most common (and seen as opposing) so-called methodologies, Waterfall and Agile.

Waterfall

The waterfall model is a sequential (non-iterative) design process, used in software development processes, in which progress is seen as flowing steadily downwards (like a waterfall) through the phases of conception, initiation, analysis, design, construction, testing, production/implementation and maintenance. The waterfall development model originates in the manufacturing and construction industries: highly structured physical environments in which after-the-fact changes are prohibitively costly, if not impossible. Because no formal software development methodologies existed at the time, this hardware-oriented model was simply adapted for software development.

The first formal description of the waterfall model is often cited as a 1970 article by Winston W. Royce, although Royce did not use the term waterfall in that article. Royce presented this model as an example of a flawed, non-working model; which is how the term is generally used in writing about software development—to describe a critical view of a commonly used software development practice.

The earliest use of the term "waterfall" may have been in a 1976 paper by Bell and Thayer.

In 1985, the United States Department of Defense captured this approach in DOD-STD-2167A, their standards for working with software development contractors, which stated that "the contractor shall implement a software development cycle that includes the following six phases: Preliminary Design, Detailed Design, Coding and Unit Testing, Integration, and Testing." (source)

Waterfall might actually be categorized as an approach, as there is no specific guidance for the six phases mentioned above -- they need to be part of the development cycle, but any specific scientific rigor applied to each of the phases is entirely missing. It is ironic that the process originates from manufacturing and construction industries, where there is often considerable mathematical analysis / modeling performed before construction begins. And certainly in the 1960s, construction of electronic hardware was expensive, and again, rigorous electrical circuit analysis using scientific methods would greatly mitigate the cost of after-the-fact changes.

Agile

There are a variety of diagrams for Agile Software Development; I've chosen one that maps somewhat to the "Scientific Method" above:

Ironically, IT Knowledge Portal describes Agile as "a conceptual framework for undertaking software engineering projects." It's difficult to understand how a conceptual framework can be described as a methodology. It seems at best to be an approach. More telling though is that the term "methodology" appears to have no foundation in "scientific inquiry, method of inquiry, investigation" when it comes to the software development process.

Software Development as a Craft

A craft is a pastime or a profession that requires particular skills and knowledge of skilled work. (source)

Historically, one's skill at a craft was described in three levels:

- Apprentice

- Journeyman

- Master Craftsman

When we look at software development as a craft, one of the advantages is that we move away from the Scientific Method and its emphasis on the natural world and physical processes. We also move away from the conundrum of applying an approach, philosophy, or methodology to the software development process. Instead, viewing software development as a craft emphasizes the skill of the craftsman (or woman). We can also begin to establish rankings of "craft skill" with the general skills discussed above in the section on Engineering.

Apprentice

An apprenticeship is a system of training a new generation of practitioners of a trade or profession with on-the-job training and often some accompanying study (classroom work and reading). Apprenticeship also enables practitioners to gain a license to practice in a regulated profession. Most of their training is done while working for an employer who helps the apprentices learn their trade or profession, in exchange for their continued labor for an agreed period after they have achieved measurable competencies. Apprenticeships typically last 3 to 6 years. People who successfully complete an apprenticeship reach the "journeyman" or professional certification level of competence. (source)

Journeyman

A "journeyman" is a skilled worker who has successfully completed an official apprenticeship qualification in a building trade or craft. They are considered competent and authorized to work in that field as a fully qualified employee. A journeyman earns their license through education, supervised experience, and examination. Although a journeyman has completed a trade certificate and is able to work as an employee, they are not yet able to work as a self-employed master craftsman. The term journeyman was originally used in the medieval trade guilds. Journeymen were paid each day, and this is where the word ‘journey’ derived from: journée, meaning ‘a day’ in French. Each individual guild generally recognized three ranks of workers: apprentices, journeymen, and masters. A journeyman, as a qualified tradesman, could become a master, running their own business, although most continued working as employees. (source)

Master Craftsman

A master craftsman or master tradesman (sometimes called only master or grandmaster) was a member of a guild. In the European guild system, only masters and journeymen were allowed to be members of the guild. An aspiring master would have to pass through the career chain from apprentice to journeyman before he could be elected to become a master craftsman. He would then have to produce a sum of money and a masterpiece before he could actually join the guild. If the masterpiece was not accepted by the masters, he was not allowed to join the guild, possibly remaining a journeyman for the rest of his life. Originally, holders of the academic degree of "Master of Arts" were also considered, in the Medieval universities, as master craftsmen in their own academic field. (source)

Where do we Fit in This Scheme?

While we all begin as apprentices and our "training" is often through the anonymity of books and the Internet as well as code camps, our peers, and looking at other people's code, we eventually develop various technical and interpersonal skills. And software development is somewhat unique as a tradecraft because we are always at different levels depending on the technology that we are attempting to use. So when we use the term "senior developer," we're really making an implied statement about what skills we have that rank us at least as a journeyman. One of the common threads of the three levels of a tradecraft is that there is a master craftsman who works with the apprentice and journeyman and in fact certifies the person to move on to the next step. Also, becoming a master craftsman required developing a "masterpiece," which is ironic because I know a few software developers who consider themselves "senior" and only have failed software projects under their belt.

And as Donald Knuth wrote in his preface to The Art of Computer Programming: "This book is...designed to train the reader in various skills that go into a programmer's craft."

Where we Fail

Analogies in the software development process include code reviews, pair programming, and even employee vs. contractor or business owner. Unfortunately, the craft model of software development is not really adopted, perhaps because employers don't want to use a system that harks back at least to medieval days. Code reviews, if they are done at all, can be very superficial and not part of a systematic development process. Pair programming is usually considered a waste of resources. Companies rarely provide the equivalent of a "certificate of accomplishment," which would be particularly beneficial when people are no longer in school and are learning skills that school never taught them (the entire Computer Science degree program is questionable as well in its ability to actually produce, at a minimum, a journeyman in software development).

Furthermore, given the newness of the software development field and the rapidly changing tools and technologies, the opportunity to work with a master craftsman is often hard to come by. In many situations, one can at best apply general principles gleaned from skills in other technologies to the apprentice work in a new technology (besides the time-honored practice of "hitting the books" and looking up simple problems on Stack Overflow). In many cases, the process of becoming a journeyman or master craftsman is a bootstrapping process with one's peers.

Software Development as an Art

(With apologies to the reader, but Knuth states the matter so eloquently, I cannot express it better.)

Quoting Donald Knuth again: "The process of preparing programs for a digital computer is especially attractive, not only because it can be economically and scientifically rewarding, but also because it can be an aesthetic experience much like composing poetry or music." and: "I have tried to include all of the known ideas about sequential computer programming that are both beautiful and easy to state."

So there is an aesthetic experience associated with programming, and programs can have the qualities of being beautiful, perhaps where the aesthetic experience, the beauty of a piece of code, lies in its ability to easily describe (or self-describe) the algorithm. In his excellent talk on Computer Programming as an Art (online PDF), Knuth states: "Meanwhile we have actually succeeded in making our discipline a science, and in a remarkably simple way: merely by deciding to call it 'computer science.'" He makes a salient statement about science vs. art: "Science is knowledge which we understand so well that we can teach it to a computer; and if we don't fully understand something, it is an art to deal with. Since the notion of an algorithm or a computer program provides us with an extremely useful test for the depth of our knowledge about any given subject, the process of going from an art to a science means that we learn how to automate something."

What a concise description of software development as an art - the process of learning something sufficiently to automate it! But there's more: "...we should continually be striving to transform every art into a science: in the process, we advance the art." An intriguing notion. He also quotes Emma Lehmer (11/6/1906 - 5/7/2007, mathematician), when she wrote in 1956 that she had found coding to be "an exacting science as well as an intriguing art." Knuth states that "The chief goal of my work as educator and author is to help people learn how to write beautiful programs...The possibility of writing beautiful programs, even in assembly language, is what got me hooked on programming in the first place."

And: "Some programs are elegant, some are exquisite, some are sparkling. My claim is that it is possible to write grand programs, noble programs, truly magnificent ones!"

Knuth then continues with some specific elements of the aesthetics of a program:

- It of course has to work correctly.

- It won't be hard to change.

- It is easily readable and understandable.

- It should interact gracefully with its users, especially when recovering from human input errors and in providing meaningful error messages or flexible input formats.

- It should use a computer's resources efficiently (but in the right places and at the right times, without prematurely optimizing a program).

- Aesthetic satisfaction is accomplished with limited tools.

- "The use of our large-scale machines with their fancy operating systems and languages doesn't really seem to engender any love for programming, at least not at first."

- "...we should make use of the idea of limited resources in our own education."

- "Please, give us tools that are a pleasure to use, especially for our routine assignments, instead of providing something we have to fight with. Please, give us tools that encourage us to write better programs, by enhancing our pleasure when we do so."

Knuth concludes his talk with: "We have seen that computer programming is an art, because it applies accumulated knowledge to the world, because it requires skill and ingenuity, and especially because it produces objects of beauty. A programmer who subconsciously views himself as an artist will enjoy what he does and will do it better."

Reading what Knuth wrote, one can understand the passion that was experienced with the advent of the 6502 processor and the first Apple II, Commodore PET, and TRS-80. One can see the resurrection of that passion with single-board computers such as the Raspberry Pi, Arduino, and BeagleBone. In fact, whenever any new technology appears on the horizon (for example, the Internet of Things) we often see the hobbyist passionately embracing the idea. Why? Because as a new idea, it speaks to our imagination. Similarly, new languages and frameworks ignite passion in some programmers, again because of that imaginative, creative quality that something new and shiny holds.

Where we Fail

Unfortunately, we also fail at bringing artistry to software development. Copy and paste is not artistic. Inconsistent code styling is not beautiful. An operating system that produces a 16-byte hex code for an error message is not graceful. The nightmare of NPM dependencies, to take one example (a great example here), is neither elegant nor beautiful, and certainly is not a pleasure to use. Knuth reminds us that programming should for the most part be pleasurable. So why have we created languages like VB and JavaScript that we love to hate, why do we use syntaxes like HTML and CSS that are complex and not fully supported across all the flavors of browsers and browser versions, and why do we put up with them? But worse, why do we ourselves write ugly, unreadable, unmaintainable code? Did we ever even look at programming from an aesthetic point of view? It is unfortunate that in a world of deadlines and performance reviews we seem to have lost (if we ever had it to begin with) the ability to program artistically.

Conclusion: What Then is the Software Development Process?

Most software development processes attempt to replicate well-developed processes of working with nature and physical construction. This is the wrong approach. Software does not usually involve things where everyone can agree on the observations and the "science." Rather, software development involves people (along with their foibles) and concepts (about which people often disagree). One distinguishing case is when software is designed to control hardware (a thing), whether it's the cash dispenser of an ATM, a self-driving car, or a spacecraft. Because the "thing" is then well known, the software development process has a firm foundation for construction. However, much in software development lacks that firm foundation. The debate over which methodology is best is inappropriate because none of the so-called methodologies put forward over the years are actual methodologies. Discussing approaches and philosophy might be fun over a beer, but does it really result in advancing the process?

In fact, the question of defining a software development process is wrong because there simply is no right answer. Emphasis should be placed on the skills of the developers themselves and how they transform the unknown into the known, with artistry. If anything, the software development process needs to be approached like a craft, in which skilled people mentor the less skilled and in which masters of the craft recognize, in some tangible manner, the "leveling up" of an apprentice or journeyman. The "process" is best described as a transformation of art into science through formalization and knowledge that can be shared with others. Within that process, the work that we do must be personally satisfying. While there is satisfaction in "it works," the true artisan also finds personal satisfaction in creating something that is aesthetically pleasing, whether it is (to name a few) in their coding style, in writing a beautiful algorithm, or in applying some technology or language feature elegantly to solve a complex problem. When we critique someone else's work, it is not sufficient to count how many unit tests pass. A true masterpiece includes the process as well as the code and the user experience, all of which should combine aspects of artistry and science. If we looked at software development in this way, we might eventually get to a better place, where processes can actually be called methodologies and where we have languages and tools that we can say were truly engineered.

What Then is a Senior Developer or a Software Engineer?

Perhaps: Someone who is senior or calls themselves a software engineer is able to apply scientific methods and has formal methodologies for their work process; demonstrates skill in the domain, tools, and languages; would be considered a master craftsman (i.e., has a proven track record, the ability to teach others, etc.); and also treats development as an art, meaning that it requires creativity, imagination, and the ability to think outside of the box of said skills, and that those skills are applied with an aesthetic sense.